Value-added teacher assessment has been a mantra for education “reformers” throughout the debate over Race to the Top. We’ve got to evaluate teachers and make hiring and firing decisions on the basis of real student performance measures – you know, like businesses – like the real world does! (A highly questionable assumption indeed – AIG bonuses, anyone?)

I address the technical issues with value-added assessment of teachers here, indicating just how premature these assertions are from a technical standpoint.

https://schoolfinance101.wordpress.com/2009/11/07/teacher-evaluation-with-value-added-measures/

At present, good value-added measures are little more than a really cool (if not totally awesome) research tool, and most of the best analyses of value-added as a tool for teacher evaluation suggest that even in the best of cases, potentially problematic biases remain.

Let’s set these technical issues aside for now and explore some practical issues. For example, just how many teachers in a public education system could even be evaluated with value-added assessment? Consider these constraints.

- Most states, like New Jersey, administer annual assessments in grades 3 through 8, and perhaps end-of-course exams or a high school exit exam. (I’ll set aside the concern that annual testing, rather than fall-to-spring testing, captures large differences in summer learning that vary with student economic status, advantaging some teachers and disadvantaging others depending on which kids they have.)

- In most cases, the established and more reliable tests exist only in language arts and math, though some states have implemented science and/or social studies tests which are arguably less cumulative.

- The most reliable VA assessment of teachers is possible where students have multiple years of prior test scores before they reach the observed teacher (a smaller technical point). This casts real doubt on the usefulness of VA assessment for evaluating teachers whose students are in their first few years of testing (grades 3 and 4 in NJ and many other states).

- By the time students reach middle school, they typically work with multiple teachers who may simultaneously influence one another’s content-area results. Even if we ignore this, at best we can evaluate only the language arts and math teachers in the middle school setting.

- You have to skip over the untested grade 9 and 10 students and their teachers. And if we have end-of-course exams, we don’t necessarily know what each student’s beginning-of-course status was, at least in a VA modeling sense.
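To make the summer-learning concern in the first point concrete, here is a toy Python sketch. It assumes a deliberately simple gain-score version of value-added (average end-of-year score minus starting score, per teacher), which is not how any state’s actual VA model works, and every score in it is invented. Both hypothetical teachers add exactly 10 points between fall and spring; only their students’ summer change differs.

```python
from statistics import mean

# Invented students: (teacher, prior_spring, fall, current_spring).
# Teacher A's students gain 2 points over the summer; teacher B's lose 3.
# Both teachers add exactly 10 points between fall and spring.
STUDENTS = [
    ("A", 200, 202, 212),
    ("A", 210, 212, 222),
    ("B", 200, 197, 207),
    ("B", 210, 207, 217),
]

PRIOR_SPRING, FALL, CURRENT_SPRING = 1, 2, 3  # column positions in each row


def mean_gain_by_teacher(students, start_col):
    """Average of (current spring score - score in start_col) for each teacher."""
    gains = {}
    for row in students:
        teacher = row[0]
        gains.setdefault(teacher, []).append(row[CURRENT_SPRING] - row[start_col])
    return {teacher: mean(g) for teacher, g in gains.items()}


# Annual (spring-to-spring) testing folds the summer change into the teacher's gain.
annual = mean_gain_by_teacher(STUDENTS, PRIOR_SPRING)
# Fall-to-spring testing isolates the school-year gain.
fall_spring = mean_gain_by_teacher(STUDENTS, FALL)

print("spring-to-spring:", annual)
print("fall-to-spring:  ", fall_spring)
```

Measured spring to spring, teacher A looks 5 points more effective than teacher B; measured fall to spring, the two are identical. The entire gap comes from what happened over the summer, before either teacher saw the kids.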

So, here is a listing of certified staffing in New Jersey in 2008 (below), by grade level and teaching area. The list does not include everyone, but it does capture the main assignment (JOB Code 1) for the vast majority of school-assigned teaching (and principal) personnel.

What this list shows is that in the best possible case, in a state with annual grades 3 to 8 assessment and a shift toward end-of-course exams, we might be able to generate VA estimates of effectiveness for about 10% of teachers, or about 20% if we count the “ungraded elementary” group I initially missed. That is, only 10% (up to 20%) would be subject to a different evaluation system than the rest. In fact, nearly 50% of teachers work in assignments that could not be evaluated with test scores at all. Admittedly, they are an important 10% (or perhaps 20%).

Okay, so maybe this would create an incentive for the real gunners among prospective teachers to dive into the areas evaluated by VA. There is an equal if not stronger possibility that those same gunners will avoid the classrooms, schools or districts where, in the evaluated content areas and grade levels, they face an uphill battle to improve outcomes (though hopefully some will welcome the challenge).

There are some obvious solutions to this dilemma:

- Test everything, every year, with cumulative measures, fall and spring. Okay, that seems a bit absurd, but it might be good economic stimulus for the testing industry. I still struggle with how we would evaluate teachers in supporting roles, such as many of those listed below, or teachers in the arts and music (perhaps applause meters… but only if we measure applause gain from concert to concert, rather than applause level?). And what about vocational education?

- Just dump all of those teachers and all of that frivolous stuff kids don’t really need, and assign each group of kids a 12-year sequence of reading and math teachers. Some have actually argued that this should be done, especially in higher-poverty and/or underperforming schools. Why, for example, should a school with inadequate math and reading scores offer instrumental music or advanced math or journalism courses? (Put down that saxophone and pick up that basic math book, Mr. Parker!) The reality is that high-poverty and underperforming schools in New Jersey and elsewhere have already concentrated their teaching staff on core activities, to the point that kids in poor urban schools have much less access to arts and athletics.

I personally have significant concerns about the idea that poor urban kids should get a string of remedial reading and math teachers over time and nothing else, while kids in neighboring affluent suburbs get additional access to foreign languages, tennis and lacrosse teams, elite jazz ensembles (this one really irks me) and orchestras. Quite honestly, successful participation in these activities is highly relevant to college admission, at least at competitive schools. And the affluent communities are certainly not going to go along with dumping all of these things.

So, if we can’t test everything every year and if it is offensive to argue for dumping all areas that aren’t or can’t reasonably be evaluated, then we have a significant gap in the usefulness of VA teacher assessment.

I did this tally very quickly using the 2007-08 NJ staffing files. Feel free to tally and re-tally and post alternative counts below. Note that most special education teachers are missing from the tally below because I haven’t yet fully recoded them for 2008. I have done so for earlier years, but those years of the staffing files don’t break out content area for MS teachers or grade level for elementary teachers. About 14% of teachers in the 2005 and 2006 data were special education teachers. At most, in the 2005 and 2006 data I get about 20% of teachers as ungraded elementary and roughly another 5% potentially relevant for VA assessment (without being able to remove untested grades).

| Main Assignment | Number of Teachers | % of Teachers | Potentially Reliable VA Assessment | No Assessment at All |
|---|---|---|---|---|
| Art | 3,106 | 2.84 | | X |
| Basic Skills | 1,779 | 1.63 | | X |
| Bilingual | 697 | 0.64 | | X |
| Computer | 917 | 0.84 | | X |
| Coord/Director | 1,263 | 1.15 | | X |
| Counselors | 29 | 0.03 | | X |
| Elem English | 522 | 0.48 | | |
| Elem Math | 535 | 0.49 | | |
| Elem Science | 381 | 0.35 | | |
| Ungraded Elem | 11,308 | 10.33 | ? | |
| ESL | 1,700 | 1.55 | | X |
| FCS | 837 | 0.76 | | X |
| Grades 1 to 3 | 12,006 | 10.97 | | |
| Grades 4 to 6 | 7,012 | 6.41 | X | |
| Grades 6 to 8 | 1,305 | 1.19 | ? | |
| HS English | 13 | 0.01 | | |
| HS English | 5,041 | 4.61 | | |
| HS Math | 4,727 | 4.32 | | |
| HS Science | 4,391 | 4.01 | | |
| HS Soc Studies | 3,968 | 3.63 | | X |
| HS World Language | 4,460 | 4.08 | | X |
| Indus Arts | 1,217 | 1.11 | | X |
| Kindergarten | 321 | 0.29 | | X |
| Kindergarten | 3,565 | 3.26 | | X |
| MS Lang Arts | 2,844 | 2.6 | X | |
| MS Math | 2,439 | 2.23 | X | |
| MS Science | 1,669 | 1.53 | ? | |
| MS Soc Studies | 1,629 | 1.49 | ? | |
| MS World Language | 440 | 0.4 | | X |
| Music | 3,665 | 3.35 | | X |
| PE | 6,963 | 6.36 | | X |
| Perf Arts | 222 | 0.2 | | X |
| Preschool | 1,052 | 0.96 | | X |
| Preschool | 557 | 0.51 | | X |
| Principal | 2,172 | 1.98 | ? | |
| Psychologist | 1,545 | 1.41 | | X |
| SC Spec Educ | 163 | 0.15 | | X |
| SC Spec Educ | 6,747 | 6.17 | | X |
| SE RR/Inclusion | 963 | 0.88 | | X |
| Supervisor | 2,360 | 2.16 | | X |
| Vice Principal | 1,828 | 1.67 | | X |
| Voc Ed | 1,067 | 0.98 | | X |
| Total | 109,433 (of about 142,000 recoded) | | 11.24 | 47.01 |
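The summary percentages in the table can be re-derived from the counts. Here is a minimal Python sketch, assuming each row is coded by which column is marked (my own reading of the table, with “?” rows kept separate) and using the stated total of 109,433 as the denominator for every share.

```python
# Rows transcribed from the 2007-08 NJ table: (main assignment, teachers, mark).
# Marks are an assumed reading of the table: "va" = potentially reliable VA
# assessment, "?" = uncertain, "none" = no assessment at all, "" = neither
# column marked. Repeated labels (e.g. two "Preschool" rows) stay separate.
ROWS = [
    ("Art", 3106, "none"), ("Basic Skills", 1779, "none"),
    ("Bilingual", 697, "none"), ("Computer", 917, "none"),
    ("Coord/Director", 1263, "none"), ("Counselors", 29, "none"),
    ("Elem English", 522, ""), ("Elem Math", 535, ""),
    ("Elem Science", 381, ""), ("Ungraded Elem", 11308, "?"),
    ("ESL", 1700, "none"), ("FCS", 837, "none"),
    ("Grades 1 to 3", 12006, ""), ("Grades 4 to 6", 7012, "va"),
    ("Grades 6 to 8", 1305, "?"), ("HS English", 13, ""),
    ("HS English", 5041, ""), ("HS Math", 4727, ""),
    ("HS Science", 4391, ""), ("HS Soc Studies", 3968, "none"),
    ("HS World Language", 4460, "none"), ("Indus Arts", 1217, "none"),
    ("Kindergarten", 321, "none"), ("Kindergarten", 3565, "none"),
    ("MS Lang Arts", 2844, "va"), ("MS Math", 2439, "va"),
    ("MS Science", 1669, "?"), ("MS Soc Studies", 1629, "?"),
    ("MS World Language", 440, "none"), ("Music", 3665, "none"),
    ("PE", 6963, "none"), ("Perf Arts", 222, "none"),
    ("Preschool", 1052, "none"), ("Preschool", 557, "none"),
    ("Principal", 2172, "?"), ("Psychologist", 1545, "none"),
    ("SC Spec Educ", 163, "none"), ("SC Spec Educ", 6747, "none"),
    ("SE RR/Inclusion", 963, "none"), ("Supervisor", 2360, "none"),
    ("Vice Principal", 1828, "none"), ("Voc Ed", 1067, "none"),
]

TOTAL = 109_433  # stated table total, used as the denominator for every share


def teachers_with(mark, extra_names=()):
    """Sum teachers in rows with the given mark, plus any rows named in extra_names."""
    return sum(n for name, n, m in ROWS if m == mark or name in extra_names)


def pct(count):
    """Share of all teachers, in percent, rounded to two decimals like the table."""
    return round(100 * count / TOTAL, 2)


va_share = pct(teachers_with("va"))
va_plus_ungraded = pct(teachers_with("va", extra_names=("Ungraded Elem",)))
no_assessment = pct(teachers_with("none"))

print(f"Potentially reliable VA: {va_share}%")
print(f"  adding ungraded elementary: {va_plus_ungraded}%")
print(f"No assessment at all: {no_assessment}%")
```

This reproduces the 11.24% VA figure and gives about 21.6% once ungraded elementary teachers are added, the “up to 20%” in the text. Computed from raw counts, the no-assessment share comes out at 47.00% rather than 47.01%; the table’s figure matches the sum of the rounded row percentages, so the small gap is just rounding.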

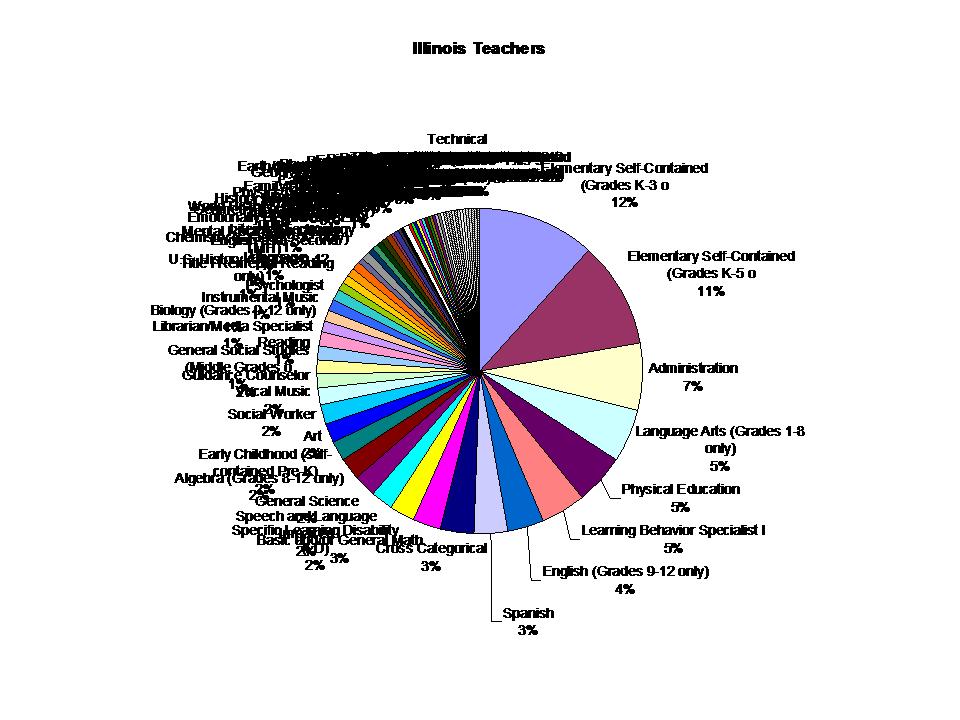

Okay, so maybe New Jersey is just a wacky, inefficient example with way too many of those extra teachers in trivial and wasteful assignments. Well, here’s the breakout of Illinois teachers for 2008.

I could go on and do this for Missouri, Minnesota, Wisconsin, Iowa, Washington and many others, showing generally the same pattern. I chose New Jersey above because the most recent years of NJ data actually break out the grade-level assignment of most elementary teachers, so we can see how many grade 1 through 3 teachers would fall outside the evaluation system.

My point here is not to trash VA evaluation of teachers, but rather to point out how little the pundits pitching immediate use of VA for hiring, firing and incentive pay have thought about even the most basic practical issues. Not the technical and statistical issues, but really simple stuff, like just how many teachers would even be evaluated under such a system. And more importantly, since this is supposedly about “incentives,” just what kind of incentives this selective evaluation might create.

One thought on “Pondering the Usefulness of Value-Added Assessment of Teachers”
